Report from the 2017 QCTools Tester Workshop

On April 21st, 2017, audiovisual preservationists from all over the United States – and Ireland – convened in sunny San Francisco to test the new features of QCTools and the upcoming SignalServer application. We asked participant Bleakley McDowell, Media Conservation & Digitization Specialist at the Smithsonian’s National Museum of African-American History and Culture, to write about his experiences (and show off our rooftop photoshoot). The workshop’s agenda and notes can be found here.

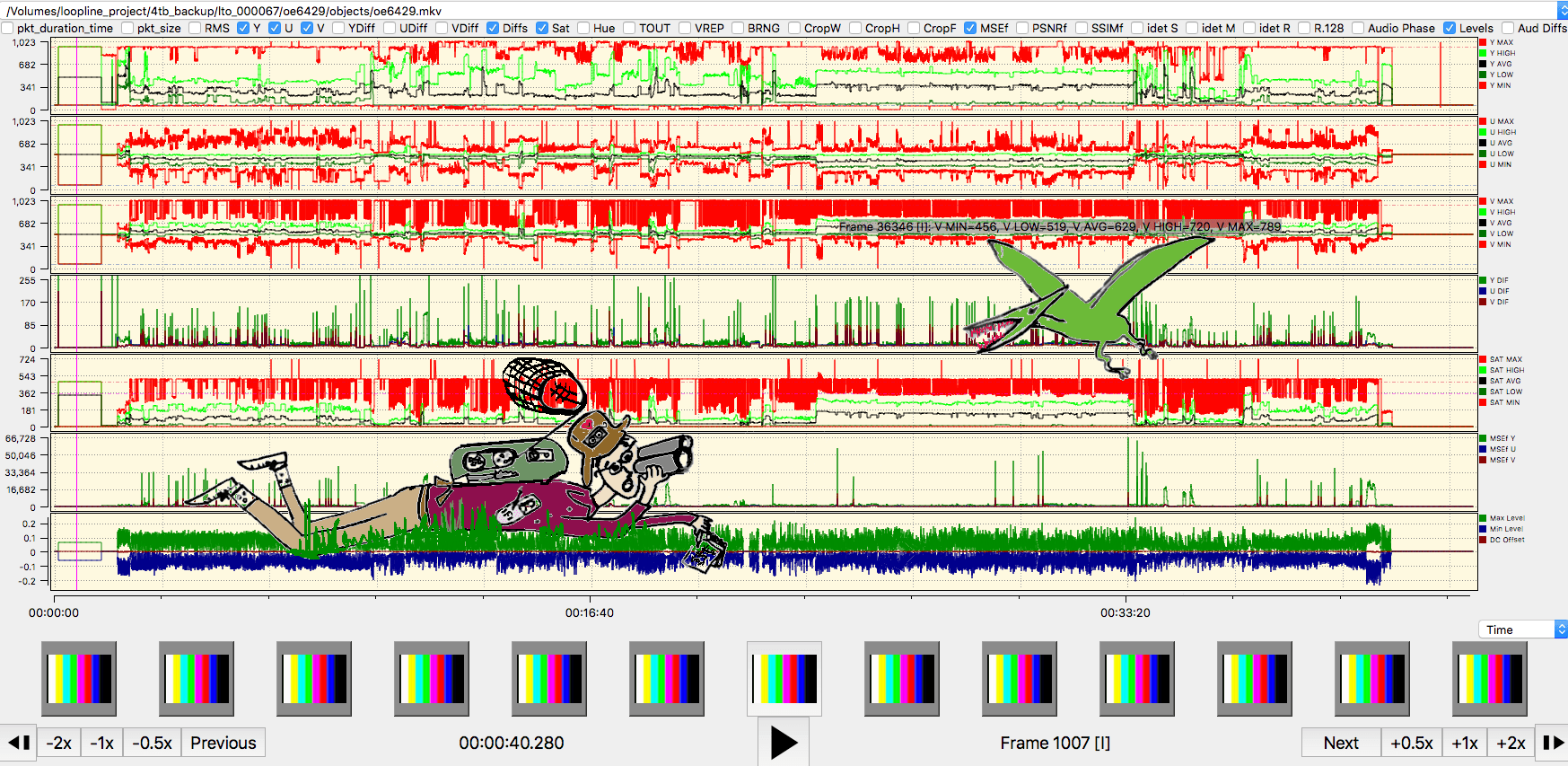

Selection from “Guess the QCTools Filters” icebreaker game (answer: Bit Plane)

Facing a large number of tape-to-file video transfers that need to be analyzed for errors before moving on to long-term digital storage can be daunting. Whether the files are received from a vendor or created in-house, any archivist, conservator, or preservationist charged with the task is going to wonder how to get it done efficiently; there’s simply not enough time to watch each video in its entirety.

Fortunately, great minds faced with this very issue have come together to create a set of tools to deal with the vexing problem of tape-to-file video quality control. In the Mission District of San Francisco, the Bay Area Video Coalition held a two-day workshop on April 21st and 22nd where the developers of QCTools discussed the most recent release with a group of 12 individuals from the field of audiovisual preservation. The developers demoed some new features and invited feedback from the attendees about how they use QCTools and what they would like to see developed next in the application. Along with the updates to QCTools, two new companion tools – qcli and SignalServer – were also showcased. SignalServer is a web-based application that collects data from QCTools reports for large-scale analysis, and qcli is a command-line tool for creating QCTools reports and uploading them to SignalServer.

The workshop opened Friday morning with a game of “Guess the QCTools Filter.” I was unable to guess a single one correctly, but the game got us ready to look at the components that make up a video in any number of unusual ways. Following that opening fun, Kieran O’Leary from the Irish Film Archive talked about using QCTools in his workflow and contributing to the project via GitHub. The two takeaways for me were: first, QCTools won’t tell you anything unless you learn to use it to hunt for what you are looking for; and second, contributing to the project on GitHub, even if just by reporting bugs, is essential, and everyone who uses QCTools should be doing it. Thanks, Kieran!

Kieran hunting for Jurassic video errors in QCTools, illustration by Emily Boylan.

Up next were Morgan Morel from George Blood Audio/Video and Brendan Coates from UC Santa Barbara Libraries. Morgan talked about how QCTools could fit into the high-volume workflow of George Blood, where everything has to be automatable. Since QCTools is very labor-intensive, it is not yet fully integrated into their workflow, but with the addition of new tools like qcli and SignalServer, which allow more batch processing and automation, it very well could be soon. There was also a discussion about setting thresholds for various filters, beyond which a file would be considered problematic. Brendan spoke about QCT-PARSE, a Python script he developed for batch processing QCTools reports.

Next we heard from Kathryn Gronsbell, the DAMS manager at Carnegie Hall. Their QC workflow can be found here. Carnegie isn’t using QCTools at this time, but Kathryn made a good argument for transparent use cases and business cases to help organizations jump on board. Finding a way to reduce time spent on QC and having a controlled vocabulary to describe issues would be especially helpful.

After we all took a break for a delicious San Francisco lunch in lovely sunny weather, Dave Rice, the lead developer of QCTools, gave a brief history of QCTools from its earliest beginnings in 2014 to the newest features (a filter for viewing VITC & CC data!) in version 0.8.

The rest of the afternoon was spent working with qcli. Ashley Blewer, a developer and consultant on the QCTools project, led this part of the workshop. The qcli command-line tool is a wonderful addition to QCTools. Much of what we learned about it at the workshop can be found here. qcli is particularly great for the batch creation of QCTools reports, something that NMAAHC will make use of. qcli also has the option to automate the uploading of QCTools reports to SignalServer, which will undoubtedly be useful for those implementing SignalServer.

The second day of the workshop started promptly at 9:30am as we reviewed some data from our breakout session the previous day. Glenn Hicks from Indiana University’s Media Digitization & Preservation Initiative (MDPI) followed up with a presentation about the workflow IU and Memnon use to digitize the entire IU audiovisual collection. QCTools is a big part of the QC workflow at IU, and Glenn seemed particularly pleased with the abilities of SignalServer, since batch processing QCTools reports would greatly speed up the process at IU. The other main takeaway from Glenn’s presentation is that IU and Memnon have some very swank facilities pumping out lots of digitized AV material – at peak per day: 9TB, 320 hours, 914 files. Wow!

We spent the majority of Day 2 learning about and testing SignalServer. Yayoi Ukai, the project’s web developer, gave us an overview of how SignalServer is built and how it runs on a server. Basically, it rests on an application called Docker. This part of the workshop stretched the limits of my knowledge about how web servers and applications rely on one another to make things work, but it was fascinating to learn about. Ashley then led a walkthrough of how to use SignalServer, and we learned that once QCTools reports (xml.gz) are uploaded to SignalServer, they can be grouped together and criteria can be set to analyze them.
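For readers curious about what is inside one of those xml.gz reports, here is a minimal Python sketch of pulling per-frame values out of a gzip-compressed XML file. It is not how SignalServer itself reads reports. It assumes the report stores each measurement as a `<tag key="..." value="..."/>` element inside a `<frame>` element, with keys such as `lavfi.signalstats.TOUT`; that layout, the function name `read_filter_values`, and the tiny sample report are all assumptions made for illustration.

```python
import gzip
import io
import xml.etree.ElementTree as ET


def read_filter_values(report, key):
    """Return the per-frame values stored under `key` in a gzipped XML report.

    `report` may be a file path or a binary file object.
    """
    values = []
    with gzip.open(report, "rb") as handle:
        # iterparse streams the XML, so a large report is not loaded all at once.
        for _event, elem in ET.iterparse(handle, events=("end",)):
            name = elem.tag.rsplit("}", 1)[-1]  # drop any XML namespace prefix
            if name == "tag" and elem.get("key") == key:
                values.append(float(elem.get("value")))
            elif name == "frame":
                elem.clear()  # free the frame once its tags have been read
    return values


# A tiny stand-in report (assumed layout), compressed in memory for the demo.
SAMPLE = b"""<?xml version="1.0"?>
<ffprobe>
  <frames>
    <frame media_type="video"><tag key="lavfi.signalstats.TOUT" value="0.01"/></frame>
    <frame media_type="video"><tag key="lavfi.signalstats.TOUT" value="0.09"/></frame>
  </frames>
</ffprobe>"""

sample_report = io.BytesIO(gzip.compress(SAMPLE))
tout_values = read_filter_values(sample_report, "lavfi.signalstats.TOUT")
print(tout_values)
```

Passing a path to a real report instead of the in-memory sample should work the same way, provided the report follows the assumed layout.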

Criteria thresholds can be set for any filter that QCTools uses – TOUT, for instance – and once SignalServer is done analyzing the reports, it produces a graph showing how the reports compare to the thresholds set. If a TOUT threshold of .06 is set, the resulting SignalServer graph will plot where the TOUT value of each report falls in relation to .06, quickly allowing reports with anomalies for that particular filter to be recognized. The process is similar to the way MediaConch applies policies to large groups of files, alerting the user when a file passes or fails the criteria of a given policy. My time with SignalServer has been limited, but it is easy to see how amazingly helpful this tool will be when faced with having to QC large numbers of video files.
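The threshold idea can be sketched in a few lines of Python. This is only an illustration of the concept, not SignalServer’s actual implementation: it assumes each report is reduced to a single summary value (here the mean of its per-frame TOUT values, which is my assumption), and the report names, the sample numbers, and the function name `flag_reports` are all hypothetical.

```python
from statistics import mean


def flag_reports(per_report_values, threshold):
    """Map each report name to (summary value, whether it exceeds the threshold)."""
    results = {}
    for name, values in per_report_values.items():
        if not values:
            continue  # skip reports that have no values for this filter
        summary = mean(values)  # assumed summary: mean of the per-frame values
        results[name] = (summary, summary > threshold)
    return results


# Hypothetical per-frame TOUT values for three reports.
reports = {
    "tape_001.qctools.xml.gz": [0.01, 0.02, 0.03],
    "tape_002.qctools.xml.gz": [0.10, 0.20, 0.30],
    "tape_003.qctools.xml.gz": [0.05, 0.05, 0.05],
}

for name, (summary, exceeds) in flag_reports(reports, 0.06).items():
    status = "REVIEW" if exceeds else "ok"
    print(f"{name}: mean TOUT {summary:.3f} -> {status}")
```

With a threshold of .06, only the second hypothetical tape is marked for review; the others fall below the line, which is the same pass/fail sorting the SignalServer graph makes visible at a glance.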

The final part of the workshop was spent talking about what new features we would like to see in QCTools. The ability to add markers and comments to the QCTools graphs layout and save settings/templates for users were the two most requested features. Look for them in 0.9!

Participants in the 2017 QCTools Tester Workshop, from left to right: Bleakley McDowell (National Museum of African-American History and Culture), Kieran O’Leary (Irish Film Archive), Morgan Morel (George Blood Audio & Video), Laura B. Johnson (BAVC Media), Michael Angeletti (Stanford Media Lab), Brendan Coates, Erica Titkemeyer, Kathryn Gronsbell (Carnegie Hall), Ashley Blewer (New York Public Library, QCTools), Karl Fitzke (Cornell University), Glenn Hicks (Indiana University), Yayoi Ukai (QCTools), Kelly Haydon (BAVC Media), David Rice (CUNY TV, QCTools), Kristin Lipska (California Revealed), Ben Turkus (New York Public Library), Charles Hosale (WGBH).