PAR, SAR, and DAR: Making Sense of Standard Definition (SD) video pixels

By Katherine Frances Nagels

It’s well known that while motion picture film has seen many different aspect ratios come and go over its history, video has been defined by just two key aspect ratios: 4:3 for analogue and standard definition (SD) video, and 16:9 for high definition (HD) video. Simple, right? Yes, but underlying this are some aspect ratios that are not so straightforward: those of the video pixels themselves.

These days, we are very used to thinking of each pixel in an image or video as having the same width and height, i.e., as being square, and this is indeed the case for the vast majority of video produced today (if not all of it). But things are a bit different for SD video. SD pixels are not square, and what’s more, they are non-square in two different ways: PAL-derived video pixels are fat/short, while NTSC-derived pixels are tall/thin.

Source: AV Artifact Atlas

To understand why, it’s necessary to take a walk through history and look at the development of Rec. 601/BT.601 (1982), the broadcast standard for encoding interlaced analogue video signals in digital form. Work on Rec. 601 began in the late 1970s, a time when digital interfaces were increasingly used in studios but digital videotape was still on the horizon. It was clear that a digital video standard would be needed to create a smooth path between the analogue and the digital, and to ensure interoperability with existing equipment. If analogue video signals were to be passed through digital equipment (and vice versa), the sampling rate of the video signal (among other factors) would need to be fixed, so that equipment could be used with predictable and repeatable results.

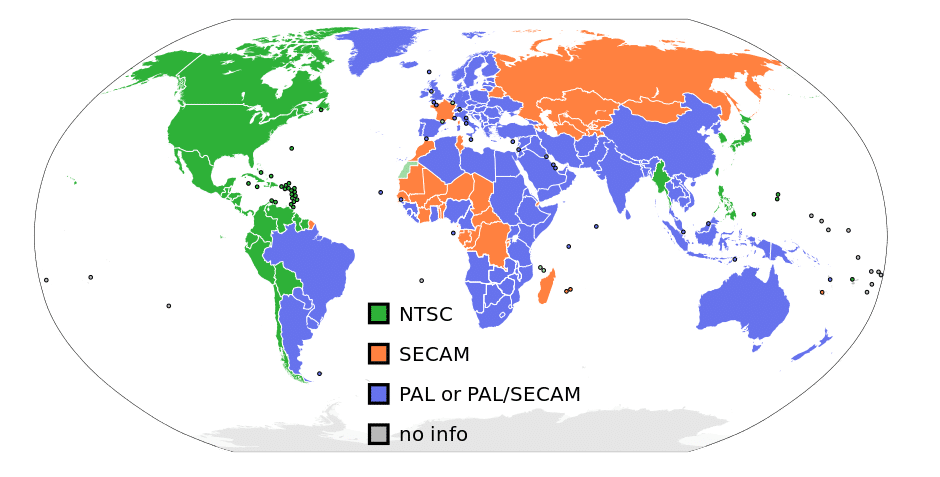

In the late 1970s, the Society of Motion Picture and Television Engineers (SMPTE) began working on a digital video standard that would set parameters in this sphere; similar conversations were taking place in Europe, with the European Broadcasting Union (EBU) also investigating digital video coding. It was quickly realized that a worldwide standard would be advantageous. However, this was far from straightforward, given that several incompatible analogue video systems were in wide use at the time.

Analogue video signals come in two main flavours. Countries with 50Hz power mains adopted a 625-line video signal running at 50 fields per second, or 25 full frames per second (fps). Of these 625 lines, 576 scan lines are active video, the rest carrying synchronizing information, closed captioning, etc. The 625-line video signal generally used the Phase Alternating Line (PAL) system to encode colour information¹, and consequently, in casual usage 625-line/25fps video is often referred to as ‘PAL video’.

Another group of countries, including those of North America, used a 525-line video signal (of which 486 scan lines were active video). The frame rate falls just slightly under 30fps (29.97fps, or 59.94 fields per second), corresponding to the 60Hz power supply. The 525-line video signal generally used the National Television System Committee (NTSC) colour encoding system², and therefore 525-line/29.97fps video is often casually referred to as ‘NTSC video’.

Source: Wikimedia Commons

Although the Rec. 601 work also addressed other key issues such as colour encoding³, an urgent matter was the horizontal sampling rate of the video signal. Because of the set number of scan lines, the vertical resolution of the video frame was already fixed: 576 (for the 625-line system) or 486 (for the 525-line system). For mathematical reasons (480, unlike 486, divides evenly by 16, which suits block-based digital compression), a slightly smaller vertical resolution of 480 was adopted in the 525-line system for SDTV/digital broadcast.

Yet each horizontal line of the video frame is an analogue (continuous) signal, and therefore could potentially be sampled at any of a number of rates. For technical reasons relating to the frequency of the sound carrier, a multiple of 4.5 MHz was preferable, and after much deliberation, a sampling rate of 13.5 MHz was agreed upon by the authors of Rec. 601. This sampling rate applied to both 625-line and 525-line video, and yielded 720 samples (pixels) across the width of the image⁴.
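The arithmetic behind the 13.5 MHz choice can be checked with exact fractions. The sketch below assumes the standard line frequencies: 625 lines × 25 frames = 15,625 Hz, and, for colour 525-line video, 4.5 MHz ÷ 286 ≈ 15,734.27 Hz (the same as 525 lines × 29.97 frames). It shows that 13.5 MHz, which is three times 4.5 MHz, gives a whole number of total samples per line in both systems (864 and 858, active picture plus blanking), of which 720 are kept as the digital active line. Treat it as a consistency check on the numbers, not a reconstruction of the committee's full reasoning; the constant and function names are illustrative.

```python
from fractions import Fraction

SAMPLING_RATE_HZ = Fraction(13_500_000)  # Rec. 601 luma sampling rate, 13.5 MHz
SOUND_CARRIER_HZ = Fraction(4_500_000)   # 4.5 MHz sound carrier spacing

# Line frequency = total lines per frame x frames per second.
LINE_FREQ_625 = Fraction(625 * 25)       # 15 625 Hz
# Colour 525-line video ties its line frequency to the sound carrier (4.5 MHz / 286),
# which equals 525 lines x 30000/1001 (29.97) frames per second.
LINE_FREQ_525 = SOUND_CARRIER_HZ / 286   # about 15 734.27 Hz


def samples_per_line(line_freq_hz: Fraction) -> Fraction:
    """Total samples (active picture plus blanking) in one scan line."""
    return SAMPLING_RATE_HZ / line_freq_hz


if __name__ == "__main__":
    assert LINE_FREQ_525 == 525 * Fraction(30_000, 1001)
    print(SAMPLING_RATE_HZ / SOUND_CARRIER_HZ)  # 3
    print(samples_per_line(LINE_FREQ_625))      # 864
    print(samples_per_line(LINE_FREQ_525))      # 858
```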

I used the terms ‘PAL-derived’ and ‘NTSC-derived’ above, but now that we have exited the analogue domain and entered the SD/digital realm, these two main video systems can be more correctly referred to as 576i and 480i (the i denoting that it is an interlaced signal).

We now have two video resolutions, 720×576 and 720×480, and we know that the aspect ratio of the video frame is 4:3. Yet it’s clear even at a glance that these two dimensions cannot both produce a 4:3 image. A closer look and a quick calculation reveal that, in fact, neither of these frame dimensions is 4:3!
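The calculation is simply a matter of reducing each frame size to lowest terms and comparing it with 4:3 (about 1.333). A minimal sketch, with an illustrative helper name:

```python
from math import gcd


def reduced_ratio(width: int, height: int) -> tuple[int, int]:
    """Reduce width:height to lowest terms."""
    g = gcd(width, height)
    return width // g, height // g


if __name__ == "__main__":
    for width, height in [(720, 576), (720, 480)]:
        w, h = reduced_ratio(width, height)
        print(f"{width}x{height} -> {w}:{h} = {width / height:.3f} (4:3 = {4 / 3:.3f})")
    # 720x576 -> 5:4 = 1.250 (4:3 = 1.333)
    # 720x480 -> 3:2 = 1.500 (4:3 = 1.333)
```

The 576-line frame is too narrow for 4:3 and the 480-line frame is too wide, which is why the two systems need pixels that are non-square in opposite directions.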

And this is where the non-square pixels come in. In effect, SD video is slightly anamorphic: in order to meet the specifications of Rec. 601 and also fill a 4:3 screen, SD pixels are ‘thin’ or ‘fat’.

This can most easily be understood via a visual comparison. I’ll use a shot of Toshiro Mifune from The Seven Samurai (1954) as an example.

On the left, we have the image correctly shown at 4:3. On the right, the same frame is shown as a 720×480 image (scaled), simulating how 480i appears squished if it is displayed with square pixels.

But 480i pixels are taller than they are wide, with a pixel aspect ratio (PAR) of 10:11. Since the pixels are ‘tall’, the image stretches vertically, filling the 4:3 frame and appearing as on the left.

What about 576i pixels? It’s the reverse.

On the left, Toshiro looks good at 4:3. On the right is a 720×576 image (scaled) that shows how a 576i image would look if shown with square pixels. But since the pixels are ‘flat’, or ‘fat’—wider than they are tall—the 576i image ‘shrinks’ down vertically to a 4:3 image.

576i ‘wide’ pixels have a PAR of 59:54.
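To make the two comparisons concrete, here is a minimal sketch of what a player effectively does: it scales the stored width by the PAR before display, using the values quoted above (10:11 for 480i, 59:54 for 576i). Real players may scale the height instead and apply their own resampling filters, and other tools quote slightly different PAR figures; the function name `displayed_size` is hypothetical.

```python
from fractions import Fraction

# PAR values as quoted in this article.
PAR_480I = Fraction(10, 11)  # tall, thin pixels
PAR_576I = Fraction(59, 54)  # wide, fat pixels


def displayed_size(width: int, height: int, par: Fraction) -> tuple[int, int]:
    """Approximate square-pixel size of a stored frame with the given PAR.

    Scales the width by the PAR (rounded to whole pixels) and keeps the line count.
    """
    return round(width * par), height


if __name__ == "__main__":
    # Tall pixels: the 720 stored samples take up less horizontal space.
    print(displayed_size(720, 480, PAR_480I))  # (655, 480)
    # Fat pixels: the 720 stored samples take up more horizontal space.
    print(displayed_size(720, 576, PAR_576I))  # (787, 576)
```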

Here’s a diagram showing the relative proportions of each type of pixel:


And how does your video playback software know to play the video file so that it looks right, rather than squashed or stretched? The answer lies in the technical metadata embedded within the video file, written into it at the time of creation. There are three parameters to consider:

- Storage aspect ratio (SAR): the dimensions of the video frame, expressed as a ratio. For a 576i video, this is 5:4 (720×576).

- Display aspect ratio (DAR): the aspect ratio the video should be played back at. For SD video, this is 4:3.

- Pixel aspect ratio (PAR): the aspect ratio of the video pixels themselves. For 576i, it’s 59:54 or 1.093.
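The three values in the list above are linked by DAR = SAR × PAR. The sketch below applies that relation with exact fractions (the helper names are hypothetical). It also surfaces a subtlety: 5:4 × 59:54 comes out at 295:216, about 1.366, rather than exactly 4:3. That is because PAR figures such as 59:54 describe the active picture width of roughly 702 to 704 samples (see footnote 4), not the full 720-sample line; the PAR that would make the entire 720×576 frame exactly 4:3 would be 16:15.

```python
from fractions import Fraction

FOUR_THREE = Fraction(4, 3)


def storage_aspect_ratio(width: int, height: int) -> Fraction:
    """SAR: the stored frame dimensions as a ratio."""
    return Fraction(width, height)


def display_aspect_ratio(width: int, height: int, par: Fraction) -> Fraction:
    """DAR = SAR x PAR."""
    return storage_aspect_ratio(width, height) * par


def par_for_full_frame(width: int, height: int, dar: Fraction = FOUR_THREE) -> Fraction:
    """PAR that would make the whole stored frame display at `dar` (PAR = DAR / SAR)."""
    return dar / storage_aspect_ratio(width, height)


def width_spanning_dar(height: int, par: Fraction, dar: Fraction = FOUR_THREE) -> Fraction:
    """Number of samples per line that, at this PAR, cover exactly `dar`."""
    return dar * height / par


if __name__ == "__main__":
    print(storage_aspect_ratio(720, 576))                    # 5/4
    print(display_aspect_ratio(720, 576, Fraction(59, 54)))  # 295/216
    print(par_for_full_frame(720, 576))                      # 16/15
    print(par_for_full_frame(720, 480))                      # 8/9
    print(float(width_spanning_dar(576, Fraction(59, 54))))  # about 702.9
    print(width_spanning_dar(480, Fraction(10, 11)))         # 704
```

One caution when checking real files: FFmpeg and ffprobe use “SAR” to mean sample aspect ratio, i.e. what this article calls PAR, so an ffprobe `sample_aspect_ratio` value describes the pixels, not the storage dimensions.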

All this sounds quite convoluted, and one might start to wonder anew why the Rec. 601 team didn’t just go with square pixels in the first place. However, as mentioned before, other requirements precluded this when the standards were drawn up: square pixels would have called for a different sampling rate for each of the 525- and 625-line systems, rather than the single 13.5 MHz rate shared by both. It also pays to remember that this was before the era of digital monitors and computing that we now take for granted. While Rec. 601 was forward-looking, it was written in the context of a workflow where everything was ultimately viewed on a cathode-ray tube (CRT) monitor, whether in the studio or by the viewer at home. Videos didn’t ‘need’ to have square pixels, because it just wasn’t relevant to most viewing contexts.

However, because it is possible to sample at rates other than 13.5 MHz (and so at widths other than 720 pixels), or to resample/edit existing videos, one quite often sees ‘square-pixel SD’ videos, usually with dimensions of 640×480 or 768×576.⁵
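Those two square-pixel sizes follow directly from the line counts: with square pixels, a 4:3 frame must be 4/3 times as wide as it is tall. A quick check, with a hypothetical helper name (486 lines is included only for comparison with the full analogue 525-line picture):

```python
from fractions import Fraction


def square_pixel_width(lines: int, dar: Fraction = Fraction(4, 3)) -> Fraction:
    """Width, in square pixels, of a frame with `lines` rows displayed at `dar`."""
    return lines * dar


if __name__ == "__main__":
    for lines in (480, 576, 486):
        print(f"{lines} lines -> {square_pixel_width(lines)}x{lines}")
    # 480 lines -> 640x480
    # 576 lines -> 768x576
    # 486 lines -> 648x486
```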

So, why care about all this? Precisely because there is a lot of analogue videotape out there that requires digitization, and since it will probably be transferred at 720×486⁶ or 720×576, as is best practice for preservation, it pays to understand where those numbers come from. The SD videotape formats (for example, DigiBeta) that succeeded their analogue counterparts also adhere to the parameters of Rec. 601, so knowing the analogue backstory is important to fully understanding those formats.

Luckily, in terms of pixels and aspect ratios, the advent of HD video simplified things considerably. It should be noted that some HD tape formats (for example, HDCAM) do in fact use non-square pixels. However, the standard for HD video broadcast, Rec. 709, firmly established square pixels as the broadcast standard going forward—thus ending a lot of headaches.

Katherine Frances Nagels is an audiovisual archivist and preservationist. A graduate of the Universiteit van Amsterdam MA programme Preservation and Presentation of the Moving Image, she most recently worked at Archives New Zealand Te Rua Mahara o te Kāwanatanga, where she was part of a major film preservation project and contributed to video preservation initiatives. You can find her on Twitter and GitHub.

Further Reading

- Rec. 601— the origins of the 4:2:2 DTV standard. Stanley Baron and David Wood. EBU Technical Review, October 2005.

- Square and non-square pixels. Chris Pirazzi. Lurker’s Guide, 1999-2015.

- Pixel Aspect Ratio, part 1 and part 2. Chris & Trish Meyer. Artbeats. No date.

- Charles Poynton – square pixels and 1080. Video where Charles Poynton discusses the origin of square pixels in HD video. 2012.

Thumbnail image by Flickr user Soupmeister

Footnotes:

1. Exceptions were France and a number of Francophone countries, which used the 50Hz/625-line system but a different colour encoding system called Séquentiel couleur à mémoire (SECAM).

2. An interesting exception is Brazil, which used a 60Hz/525-line system but encoded colour using the PAL system. This variant is known as PAL-M.

3. Rec. 601 was, in fact, the origin of the 4:2:2 chroma subsampling scheme still in wide use today. Although chroma subsampling is not the focus of this article, it’s important to acknowledge that the figure of 720 used here refers to the luma (Y) samples of the component video signal. Each colour-difference signal (Pb/Pr) is sampled at half that rate (6.75 MHz), giving 360 samples per line.

4. To be more precise, 13.5 MHz sampling actually yields 702 ‘active’ pixels for each scan line, but in practice 720 (or sometimes 704) pixels are captured. This rounded-up figure of 720 pixels slightly oversamples the video signal, leaving a little ‘headroom’ by extending a bit into the horizontal blanking interval at each end of the scan line. This allows for slight deviations in timing in the video signal and provides a round number for ease of standardization and manufacture.

5. More information on the topic of square pixels and other conundrums of playback presentation can be found in Dave Rice’s Sustaining Consistent Video Presentation.

6. Many analogue-to-digital converters and interfaces work with 480 lines, meaning that 6 lines are dropped. However, when digitizing analogue video, it is best practice to retain all 486 lines of the original video signal. Digital video sources are likely to have only 480 lines of active image, as described above.